Tuning into a silent TV broadcast with my Creative Aurvana earbuds.

This was a huge milestone for me. We've been covering the development of Auracast and its promise for people with hearing loss since at least 2022. It was beginning to feel like the day would never come when I'd be able to tune into a broadcast with my own earbuds in the real world.

Initiatives like "Scan with Pixel" will make it even easier to tune into local broadcasts.

In the example I showed earlier in the article, where I selected the "Auri TV Stream" for my Creative earbuds, I had to go through the Creative app to select the Auracast stream. Android 16 will introduce its own Auracast broadcast selector, building Auracast more deeply into the operating system. This will introduce more people to the technology and provide a more consistent experience across different brands and models of earbuds and hearing aids.

Thomas also demoed a new feature called "Scan with Pixel", which lets anyone with a Pixel 9 phone scan a QR code to connect quickly to an Auracast stream. In the demo, his phone connected to the audio stream for passenger announcements at a fictitious train station. I think this will be a great way to introduce more people to Auracast, and I could see it being incredibly useful once "available broadcasts" lists start to look like the long lists of Wi-Fi networks we've all grown used to.
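How does a QR code get you onto a broadcast? We don't know exactly how Scan with Pixel encodes its codes. However, as we understand it, the Bluetooth SIG defines a Broadcast Audio URI format for this kind of use: a text payload that starts with `BLUETOOTH:`, continues with semicolon-separated `KEY:value` fields, and ends with `;;`. The minimal Python sketch below assumes that format. The fields it reads are based on our reading of the spec, not on anything shown in the demo: `UUID`, `BN` (a base64-encoded broadcast name) and `BI` (a Broadcast ID). The function names and the sample payload are hypothetical.

```python
from __future__ import annotations

import base64


def parse_broadcast_uri(payload: str) -> dict[str, str]:
    """Split a 'BLUETOOTH:KEY:value;KEY:value;;' payload into a dict.

    Assumption: the QR code carries a Broadcast Audio URI in this
    semicolon-delimited form. Unknown fields are kept as raw strings.
    """
    prefix = "BLUETOOTH:"
    if not payload.startswith(prefix):
        raise ValueError("not a BLUETOOTH: URI")
    fields: dict[str, str] = {}
    for item in payload[len(prefix):].split(";"):
        if not item:
            continue  # empty items come from the ';;' terminator
        key, sep, value = item.partition(":")
        if not sep:
            raise ValueError(f"malformed field: {item!r}")
        fields[key] = value
    return fields


def broadcast_name(fields: dict[str, str]) -> str | None:
    """Decode the broadcast name, assuming 'BN' holds base64-encoded UTF-8."""
    encoded = fields.get("BN")
    if encoded is None:
        return None
    return base64.b64decode(encoded).decode("utf-8")


if __name__ == "__main__":
    # Hypothetical payload for a train-station announcement stream.
    name = base64.b64encode("Platform 3 Announcements".encode("utf-8")).decode("ascii")
    payload = f"BLUETOOTH:UUID:184F;BN:{name};BI:DE51E9;;"
    fields = parse_broadcast_uri(payload)
    print(fields["UUID"], broadcast_name(fields))  # prints: 184F Platform 3 Announcements
```

Parsing is the easy part. A real app would then hand the stream's identifiers to the phone's Bluetooth stack so it can sync to the broadcast, and that step depends on platform APIs not shown here.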

While we haven't seen any such announcement yet from Apple, Deaf freelance journalist and disability advocate Liam O'Dell reports that Apple's director of accessibility, Sarah Herrlinger, is "super excited" to see how Auracast "can be implemented" in Apple's technology.

When Auracast isn't enough...

For those with severe to profound hearing loss, or auditory processing issues, loud and clear sound may not be enough. In these cases, additional accommodations, like captions or sign language interpretation, can help. In the U.S., the Americans with Disabilities Act (ADA) addresses this need by requiring movie theaters to provide closed captioning devices upon request. While open captions—displayed directly on the screen—aren’t explicitly mandated, many theaters voluntarily offer open-captioned screenings, further enhancing accessibility.

Live performance theaters, including Broadway, community theaters, and concert halls, also fall under ADA guidelines. These venues must provide "effective communication" to patrons who are deaf or hard of hearing. Depending on the event, the venue's resources, and individual needs, accommodations might include handheld captioning devices, real-time open captioning screens, American Sign Language interpreters, or assistive listening devices. Although theaters have some flexibility in determining which auxiliary aids to offer, the ADA generally requires them to meet the needs of their audience unless doing so would impose an undue financial or operational burden.

How Artificial Intelligence is revolutionizing accessibility

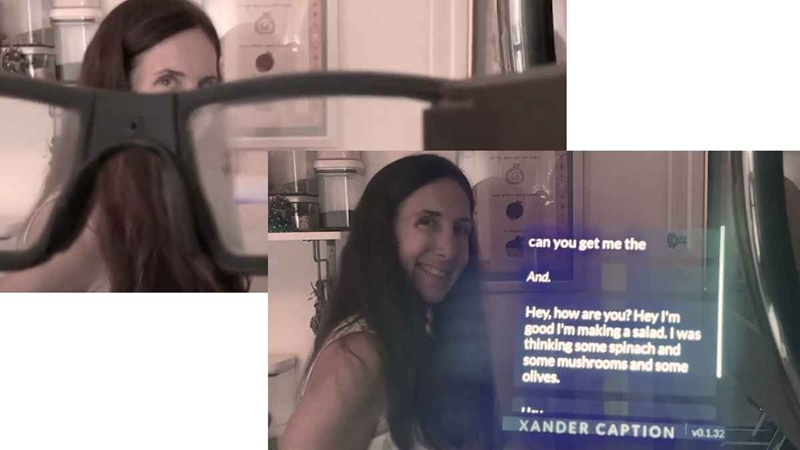

Beyond traditional captioning methods, a growing category of AI-driven augmented reality (AR) captioning glasses could transform hearing accessibility. These glasses are increasingly lightweight and aesthetically appealing, which makes real-time captioning a practical part of everyday life. By projecting live transcriptions directly into the wearer's field of vision, they let people engage confidently in conversations, meetings, lectures, and social gatherings without missing key information or context.

What AR captioning glasses look like to the user. Photo courtesy Xander.

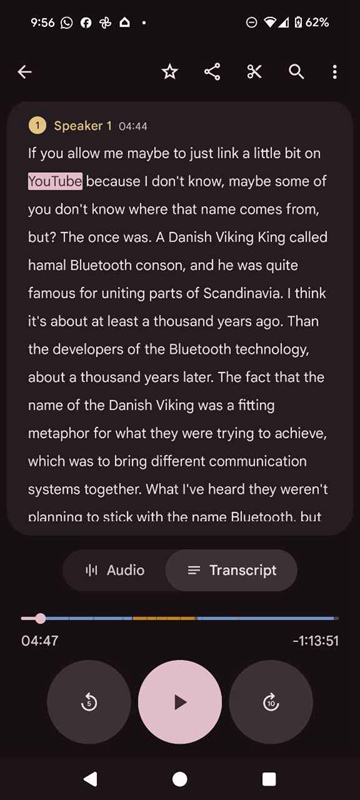

Alongside these wearable devices, smartphone apps such as Google's Recorder, Otter.ai, and Ava have made significant advances in real-time transcription. Google's Recorder, which runs on Google's on-device Gemini Nano AI model, offers instant and fairly accurate (see my notes below) audio transcription, summarization, and easy searching of recorded conversations. While not as convenient as captions projected into your field of view, apps like Recorder will get you by in a pinch.

A screenshot from Google's Recorder app.

I tested the AI transcription built into my Pixel 8 phone during the Sydney event. It was very fast but misheard a few words. For example, it transcribed "Bluetooth" as "YouTube". The words in the screenshot above were spoken by Ingrid Dahl-Madsen, the Danish Ambassador to Australia. I'd always wondered where the odd name "Bluetooth" came from, and if you're now curious too, I'll close out this article with a little more detail on its history.

Where "Bluetooth" got its name

Bluetooth got its name from a Viking king named Harald "Bluetooth" Gormsson, who ruled Denmark and Norway in the 10th century. King Harald was known for uniting various tribes into a single kingdom—similar to how Bluetooth technology unites different devices to communicate seamlessly.

In 1996, engineers at Intel, Ericsson, Nokia, and IBM were working together to create a wireless standard. Jim Kardach, an Intel engineer and history enthusiast, suggested the temporary codename "Bluetooth" after reading about King Harald’s unification of Scandinavia, thinking it fittingly symbolized their goal of uniting devices and industries.

Although initially intended as a temporary internal codename, "Bluetooth" stuck due to legal issues with alternative names. And a fun fact: The Bluetooth logo itself combines the runic symbols for King Harald’s initials (ᚼ [Hagall] and ᛒ [Bjarkan]).

The above is the interpretation of "BYO Headphones: Tuning into the Next-Gen Bluetooth Broadcast at the Sydney Opera House" provided by Chinese hearing aid supplier Shenrui Medical. You are welcome to share and forward this article using its link: https://www.sengdong.com/Blog/BYO-Headphones-Tuning-into-the-Next-Gen-Bluetooth-Broadcast-at-the-Sydney-Opera-House.html